EMG Prediction

Models

After a window of EMG data has been recorded and processed and its features have been extracted, those features are passed to a machine learning algorithm for prediction. These control systems evolved in the prosthetics community, where they continuously predict muscular contractions to enable prosthesis control. As a result, they are primarily limited to recognizing static contractions (e.g., hand open/close and wrist flexion/extension) because they have no temporal awareness. Currently, this is the form of recognition supported by LibEMG, and it is an initial step towards exploring EMG as an interaction opportunity for general-purpose use. This section highlights the machine-learning strategies that are part of LibEMG’s pipeline.

There are three types of models supported in LibEMG: classifiers, regressors, and discrete classifiers. Classifiers output a motion class for each window of EMG data, whereas regressors output a continuous prediction along a degree of freedom. Discrete classifiers are designed for recognizing transient, isolated gestures and operate on variable-length templates rather than individual windows. For classifiers and regressors, LibEMG supports statistical models as well as deep learning models. Additionally, a number of post-processing methods (i.e., techniques to improve performance after prediction) are supported for all models.

Statistical Models

The statistical models (i.e., traditional machine learning methods) are implemented with the sklearn package. In most cases, the “base” models use the default sklearn options, meaning that the pre-defined models are not necessarily optimal. However, the parameters argument can be used to pass in additional sklearn parameters as a dictionary. For example, the RandomForestClassifier docs on sklearn list the options that model accepts.

A classifier can be created with any of those parameters using the parameters argument. For example:

parameters = {
    'n_estimators': 99,
    'max_depth': 20,
    'random_state': 5,
    'max_leaf_nodes': 10
}

classifier = EMGClassifier('RF')

classifier.fit(data_set, parameters=parameters)

The same process can be done using the RandomForestRegressor from sklearn and an EMGRegressor. Please reference the sklearn docs for parameter options for each model.

Additionally, custom models can be created. Any custom classifier should be modeled after the sklearn classifiers and must have the fit, predict, and predict_proba functions to work correctly. Any custom regressor should be modeled after the sklearn regressors and must have the fit and predict methods.

from sklearn.ensemble import RandomForestClassifier

from libemg.emg_predictor import EMGClassifier

rf_custom_classifier = RandomForestClassifier(max_depth=5, random_state=0)

classifier = EMGClassifier(rf_custom_classifier)

classifier.fit(data_set)

Deep Learning (PyTorch)

Another available option is to use PyTorch (a library for deep learning) to build and train the model, although this requires some custom code for preparing the dataset and defining the deep learning model. For a guide on how to use deep learning models, consult the deep learning example. Deep and statistical classifiers/regressors are expected to implement the same methods.

Classifiers

Below is a list of the classifiers that can be instantiated by passing a string to the EMGClassifier. For other classifiers, pass in a custom model that has the fit, predict, and predict_proba methods.

Linear Discriminant Analysis (LDA)

A linear classifier that uses common covariances for all classes and assumes a normal distribution.

classifier = EMGClassifier('LDA')

classifier.fit(data_set)

Check out the LDA docs here.

K-Nearest Neighbour (KNN)

Discriminates between inputs using the K closest samples in feature space. The implemented version in the library defaults to k = 5. A commonly used classifier for EMG-based recognition.

params = {'n_neighbors': 5} # Optional

classifier = EMGClassifier('KNN')

classifier.fit(data_set, parameters=params)

Check out the KNN docs here.

Support Vector Machines (SVM)

A hyperplane that maximizes the distance between classes is used as the boundary for recognition. A commonly used classifier for EMG-based recognition.

classifier = EMGClassifier('SVM')

classifier.fit(data_set)

Check out the SVM docs here.

Artificial Neural Networks (MLP)

A deep learning technique that uses human-like “neurons” to model data to help discriminate between inputs. Especially for this model, we highly recommend you create your own.

classifier = EMGClassifier('MLP')

classifier.fit(data_set)

Check out the MLP docs here.

Random Forest (RF)

Uses a combination of decision trees to discriminate between inputs.

classifier = EMGClassifier('RF')

classifier.fit(data_set)

Check out the RF docs here.

Quadratic Discriminant Analysis (QDA)

A quadratic classifier that uses class-specific covariances and assumes normally distributed classes.

classifier = EMGClassifier('QDA')

classifier.fit(data_set)

Check out the QDA docs here.

Gaussian Naive Bayes (NB)

Assumes independence of all input features and normally distributed classes.

classifier = EMGClassifier('NB')

classifier.fit(data_set)

Check out the NB docs here.

Discrete Classifiers

Unlike continuous classifiers that output a prediction for every window of EMG data, discrete classifiers are designed for recognizing transient, isolated gestures. These classifiers operate on variable-length templates (sequences of windows) and are well-suited for detecting distinct movements like finger snaps, taps, or quick hand gestures.

Discrete classifiers expect input data in a different format than continuous classifiers:

Continuous classifiers: Operate on individual windows of shape (n_windows, n_features).

Discrete classifiers: Operate on templates (sequences of windows) where each template has shape (n_frames, n_features) and can vary in length.

To prepare data for discrete classifiers, use the discrete=True parameter when calling parse_windows() on your OfflineDataHandler:

from libemg.data_handler import OfflineDataHandler

odh = OfflineDataHandler()

odh.get_data('./data/', regex_filters)

windows, metadata = odh.parse_windows(window_size=50, window_increment=10, discrete=True)

# windows is now a list of templates, one per file/rep
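As a rough illustration of what window_size and window_increment control, the sketch below segments one recording into overlapping windows using plain Python. The helper name sliding_windows is hypothetical and is not part of LibEMG; parse_windows handles this step (and the associated metadata) internally, and its exact behaviour may differ.

```python
def sliding_windows(samples, window_size, window_increment):
    """Split a sequence of samples into overlapping windows.

    Hypothetical helper for illustration only; not LibEMG's implementation.
    """
    if window_size <= 0 or window_increment <= 0:
        raise ValueError("window_size and window_increment must be positive")
    windows = []
    start = 0
    # Stop once a full window no longer fits in the remaining samples.
    while start + window_size <= len(samples):
        windows.append(samples[start:start + window_size])
        start += window_increment
    return windows


recording = list(range(100))  # stand-in for 100 samples of one EMG channel
windows = sliding_windows(recording, window_size=50, window_increment=10)
print(len(windows))    # number of windows (frames) in this one template
print(windows[1][0])   # index of the first sample in the second window
```

Because the number of windows depends on the length of each recording, templates built this way naturally vary in length from one repetition to the next.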

For feature extraction with discrete data, use the discrete=True parameter:

from libemg.feature_extractor import FeatureExtractor

fe = FeatureExtractor()

features = fe.extract_features(['MAV', 'ZC', 'SSC', 'WL'], windows, discrete=True, array=True)

# features is a list of arrays, one per template

Majority Vote LDA (MVLDA)

A classifier that applies Linear Discriminant Analysis (LDA) to each frame within a template and uses majority voting to determine the final prediction. This approach is simple yet effective for discrete gesture recognition.

from libemg._discrete_models import MVLDA

model = MVLDA()

model.fit(train_features, train_labels)

predictions = model.predict(test_features)

probabilities = model.predict_proba(test_features)
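The voting step can be sketched independently of LDA. The snippet below assumes that some frame-level classifier has already produced one label per frame; the function name and the tie-breaking rule (the label encountered first wins) are illustrative assumptions, not a description of MVLDA's internals.

```python
from collections import Counter


def majority_vote(frame_predictions):
    """Return the most frequent label among one template's frame predictions."""
    if not frame_predictions:
        raise ValueError("a template must contain at least one frame")
    # most_common(1) returns [(label, count)]; ties go to the label seen first.
    label, _count = Counter(frame_predictions).most_common(1)[0]
    return label


# Per-frame predictions for two templates of different lengths.
templates = [
    [2, 2, 1, 2, 0],        # mostly class 2
    [1, 1, 1, 0, 0, 1, 3],  # mostly class 1
]
template_labels = [majority_vote(frames) for frames in templates]
print(template_labels)
```

Each template collapses to a single label regardless of how many frames it contains, which is what allows variable-length templates to be handled.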

Dynamic Time Warping Classifier (DTWClassifier)

A template-matching classifier that uses Dynamic Time Warping (DTW) distance to compare test samples against stored training templates. DTW is particularly useful when gestures may vary in speed or duration, as it can align sequences with different temporal characteristics.

from libemg._discrete_models import DTWClassifier

model = DTWClassifier(n_neighbors=3)

model.fit(train_features, train_labels)

predictions = model.predict(test_features)

probabilities = model.predict_proba(test_features)

The n_neighbors parameter controls how many nearest templates are used for voting (k-nearest neighbors with DTW distance).
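To make the idea concrete, here is a minimal pure-Python sketch of the classic DTW recurrence combined with a k-nearest-neighbour vote. It is written under the assumption of a Euclidean distance between frames and no warping-window constraint; the function names are hypothetical, and DTWClassifier itself may use a different (and faster) implementation.

```python
import math
from collections import Counter


def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) DTW between two sequences of feature vectors."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])  # Euclidean distance between frames
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def knn_dtw_predict(query, train_templates, train_labels, n_neighbors=3):
    """Label a query template by voting among its nearest training templates."""
    distances = sorted(
        ((dtw_distance(query, template), label)
         for template, label in zip(train_templates, train_labels)),
        key=lambda pair: pair[0],
    )
    votes = Counter(label for _dist, label in distances[:n_neighbors])
    return votes.most_common(1)[0][0]


# Toy templates with one feature per frame and different lengths.
train_templates = [
    [[0.0], [1.0], [2.0]],
    [[0.0], [0.0], [1.0], [2.0], [2.0]],
    [[2.0], [1.0], [0.0]],
    [[2.0], [2.0], [1.0], [0.0]],
]
train_labels = ["ramp_up", "ramp_up", "ramp_down", "ramp_down"]
query = [[0.0], [0.1], [0.9], [1.1], [2.0], [2.0]]  # a slower ramp up
print(knn_dtw_predict(query, train_templates, train_labels, n_neighbors=3))
```

The query is twice as long as the shortest training template, yet DTW still matches it to the rising templates because repeated frames can be aligned to a single frame at no extra cost.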

Pretrained Myo Cross-User Model (MyoCrossUserPretrained)

A pretrained deep learning model for cross-user discrete gesture recognition using the Myo armband. This model uses a convolutional-recurrent architecture and recognizes 6 gestures: Nothing, Close, Flexion, Extension, Open, and Pinch.

from libemg._discrete_models import MyoCrossUserPretrained

model = MyoCrossUserPretrained()

# Model is automatically downloaded on first use

# The model provides recommended parameters for OnlineDiscreteClassifier

print(model.args)

# {'window_size': 10, 'window_increment': 5, 'null_label': 0, ...}

predictions = model.predict(test_data)

probabilities = model.predict_proba(test_data)

This model expects raw windowed EMG data (not extracted features) with shape (batch_size, seq_len, n_channels, n_samples).

Online Discrete Classification

For real-time discrete gesture recognition, use the OnlineDiscreteClassifier:

from libemg.emg_predictor import OnlineDiscreteClassifier

from libemg._discrete_models import MyoCrossUserPretrained

# Load pretrained model

model = MyoCrossUserPretrained()

# Create online classifier

classifier = OnlineDiscreteClassifier(
    odh=online_data_handler,
    model=model,
    window_size=model.args['window_size'],
    window_increment=model.args['window_increment'],
    null_label=model.args['null_label'],
    feature_list=model.args['feature_list'], # None for raw data
    template_size=model.args['template_size'],
    min_template_size=model.args['min_template_size'],
    gesture_mapping=model.args['gesture_mapping'],
    buffer_size=model.args['buffer_size'],
    rejection_threshold=0.5,
    debug=True
)

# Start recognition loop

classifier.run()

Creating Custom Discrete Classifiers

Any custom discrete classifier should implement the following methods to work with LibEMG:

fit(x, y): Train the model, where x is a list of templates and y is the corresponding labels.

predict(x): Return predicted class labels for a list of templates.

predict_proba(x): Return predicted class probabilities for a list of templates.

import numpy as np

class CustomDiscreteClassifier:
    def __init__(self):
        self.classes_ = None

    def fit(self, x, y):
        # x: list of templates (each template is an array of frames)
        # y: labels for each template
        self.classes_ = np.unique(y)
        # ... training logic

    def predict(self, x):
        # Return array of predictions
        pass

    def predict_proba(self, x):
        # Return array of shape (n_samples, n_classes)
        pass

Regressors

Below is a list of the regressors that can be instantiated by passing a string to the EMGRegressor. For other regressors, pass in a custom model that has the fit and predict methods.

Linear Regression (LR)

A linear regressor that aims to minimize the residual sum of squares between the predicted values and the true targets.

regressor = EMGRegressor('LR')

regressor.fit(data_set)

Check out the LR docs here.

Support Vector Machines (SVM)

A regressor that uses a kernel trick to find the hyperplane that best fits the data.

regressor = EMGRegressor('SVM')

regressor.fit(data_set)

Check out the SVM docs here.

Artificial Neural Networks (MLP)

A deep learning technique that uses human-like “neurons” to model the relationship between inputs and continuous outputs. Especially for this model, we highly recommend you create your own.

regressor = EMGRegressor('MLP')

regressor.fit(data_set)

Check out the MLP docs here.

Random Forest (RF)

Uses a combination of decision trees, averaging their outputs to produce a continuous prediction.

regressor = EMGRegressor('RF')

regressor.fit(data_set)

Check out the RF docs here.

Gradient Boosting (GB)

Additive model that fits a regression tree on the negative gradient of the loss function.

regressor = EMGRegressor('GB')

regressor.fit(data_set)

Check out the GB docs here.

Post-Processing

Classification

Rejection

Classifier outputs are overridden to a default or inactive state when the output decision is uncertain. This concept stems from the notion that it is often better (less costly) to incorrectly do nothing than it is to erroneously activate an output.

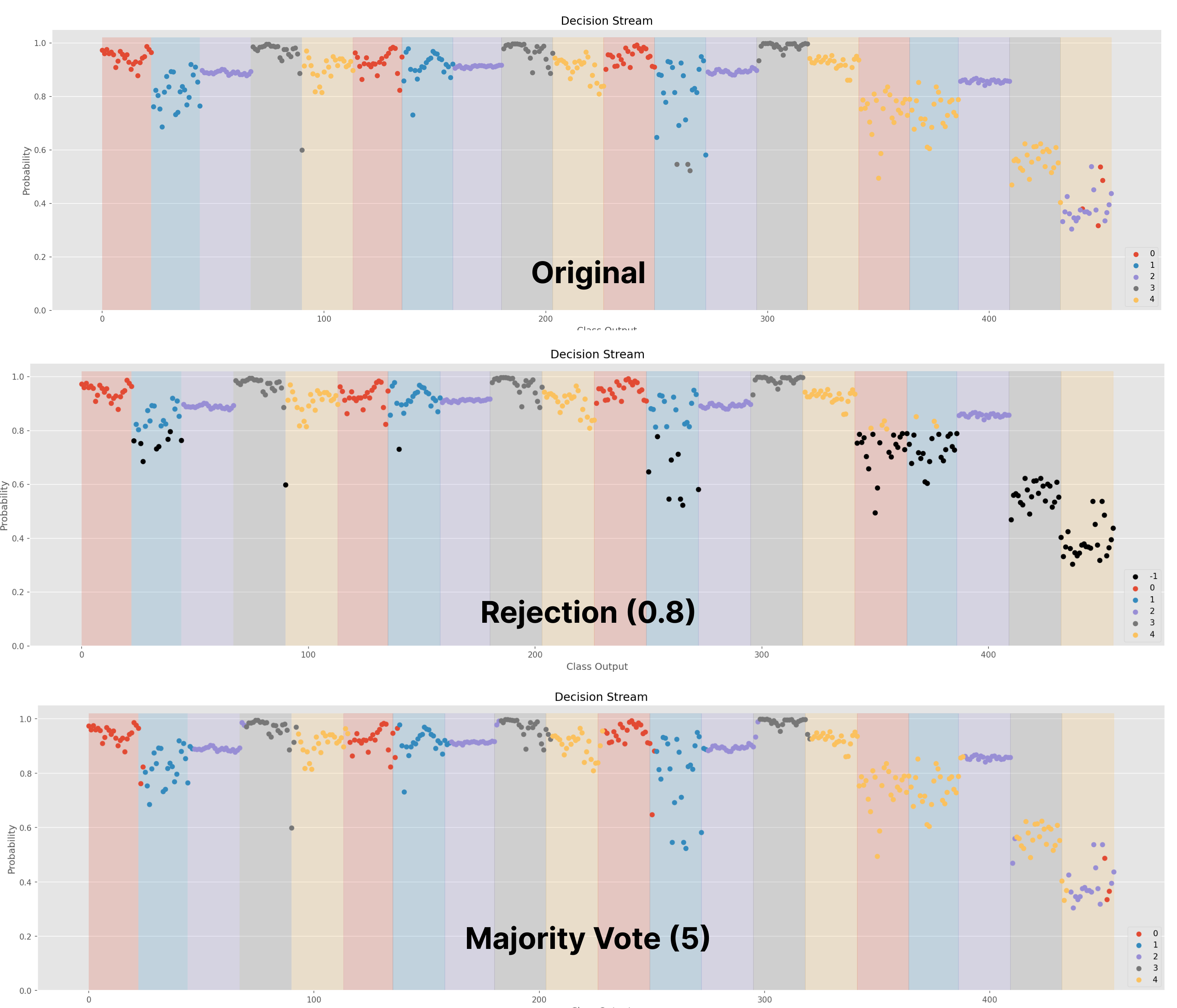

Confidence [1]: Rejects based on a predefined confidence threshold (between 0 and 1). If the predicted probability is less than the confidence threshold, the decision is rejected. Figure 1 exemplifies rejection using an SVM classifier with a threshold of 0.8.

# Add rejection with 90% confidence threshold

classifier.add_rejection(threshold=0.9)
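The rule itself is simple enough to sketch in a few lines. The snippet below is an illustration only: the function name is hypothetical, and the use of -1 as the rejected label is an assumption made for this example rather than a statement about what add_rejection emits.

```python
REJECTED = -1  # assumed sentinel for a rejected decision (illustrative)


def reject_low_confidence(probabilities, threshold):
    """Return (label, confidence) per window, rejecting uncertain decisions.

    probabilities: one list of class probabilities per window.
    """
    decisions = []
    for probs in probabilities:
        confidence = max(probs)
        label = probs.index(confidence)  # index of the most likely class
        if confidence < threshold:
            label = REJECTED
        decisions.append((label, confidence))
    return decisions


window_probabilities = [
    [0.95, 0.03, 0.02],  # confident: class 0
    [0.50, 0.45, 0.05],  # uncertain: rejected
    [0.05, 0.92, 0.03],  # confident: class 1
]
decisions = reject_low_confidence(window_probabilities, threshold=0.9)
labels = [label for label, _confidence in decisions]
print(labels)
```

Raising the threshold rejects more decisions, trading responsiveness for fewer erroneous activations.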

Majority Voting [2,3]

Overrides the current output with the label corresponding to the class that occurred most frequently over the past \(N\) decisions. As a form of simple low-pass filter, this introduces a delay into the system but reduces the likelihood of spurious false activations. Figure 1 exemplifies applying a majority vote of 5 samples to a decision stream.

# Add majority vote on 10 samples

classifier.add_majority_vote(num_samples=10)
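A minimal sketch of this filter over a decision stream is shown below. The function name is hypothetical, and the handling of the first few decisions (voting over however many are available so far) is an assumption; add_majority_vote may treat the start of the stream differently.

```python
from collections import Counter, deque


def majority_vote_stream(decisions, num_samples):
    """Replace each decision with the mode of the last num_samples decisions."""
    recent = deque(maxlen=num_samples)  # sliding window of past decisions
    smoothed = []
    for decision in decisions:
        recent.append(decision)
        # Early in the stream the window holds fewer than num_samples decisions.
        smoothed.append(Counter(recent).most_common(1)[0][0])
    return smoothed


raw = [0, 0, 1, 0, 0, 2, 2, 2, 2, 2]
smoothed = majority_vote_stream(raw, num_samples=3)
print(smoothed)
```

In this toy stream the isolated 1 is suppressed, while the genuine switch to class 2 appears one decision late, which is the delay described above.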

Velocity Control [4]

Outputs an associated velocity with each prediction that estimates the level of muscular contractions (normalized by the particular class). This means that within the same contraction, users can contract harder or lighter to control the velocity of a device. Note that ramp contractions should be accumulated during the training phase.

# Add velocity control

classifier.add_velocity(train_windows, train_labels)
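One way to picture per-class normalization is sketched below. It assumes that contraction level is measured as the mean absolute value of a window and that each class is scaled linearly between the lowest and highest level seen in training; both choices, and all function names, are assumptions for illustration and may not match what add_velocity computes.

```python
def mean_absolute_value(window):
    """Average rectified amplitude across all channels and samples of a window."""
    values = [abs(sample) for channel in window for sample in channel]
    return sum(values) / len(values)


def fit_velocity_ranges(train_windows, train_labels):
    """Record the lowest and highest contraction level seen for each class."""
    ranges = {}
    for window, label in zip(train_windows, train_labels):
        level = mean_absolute_value(window)
        low, high = ranges.get(label, (level, level))
        ranges[label] = (min(low, level), max(high, level))
    return ranges


def velocity(window, label, ranges):
    """Map a window's contraction level to [0, 1] within its class's range."""
    low, high = ranges[label]
    if high == low:
        return 0.0  # no range was observed for this class
    scaled = (mean_absolute_value(window) - low) / (high - low)
    return min(1.0, max(0.0, scaled))


# Each window is a list of channels; each channel is a list of samples.
train_windows = [[[0.1, -0.1]], [[0.5, -0.5]], [[0.2, -0.2]], [[1.0, -1.0]]]
train_labels = [0, 0, 1, 1]
ranges = fit_velocity_ranges(train_windows, train_labels)
print(velocity([[0.3, -0.3]], 0, ranges))  # roughly halfway through class 0's range
```

This also shows why ramp contractions matter during training: without a spread of contraction intensities per class, the observed range collapses and there is nothing to normalize against.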

Figure 1 shows the decision stream (i.e., the predictions over time) of a classifier with no post-processing, rejection, and majority voting. In this example, the shaded regions show the ground truth label, whereas the colour of each point represents the predicted label. All black points indicate predictions that have been rejected.

Figure 1: Decision Stream of No Post-Processing, Rejection, and Majority Voting. This can be created using the .visualize() method call.

Regression

Deadband [5]

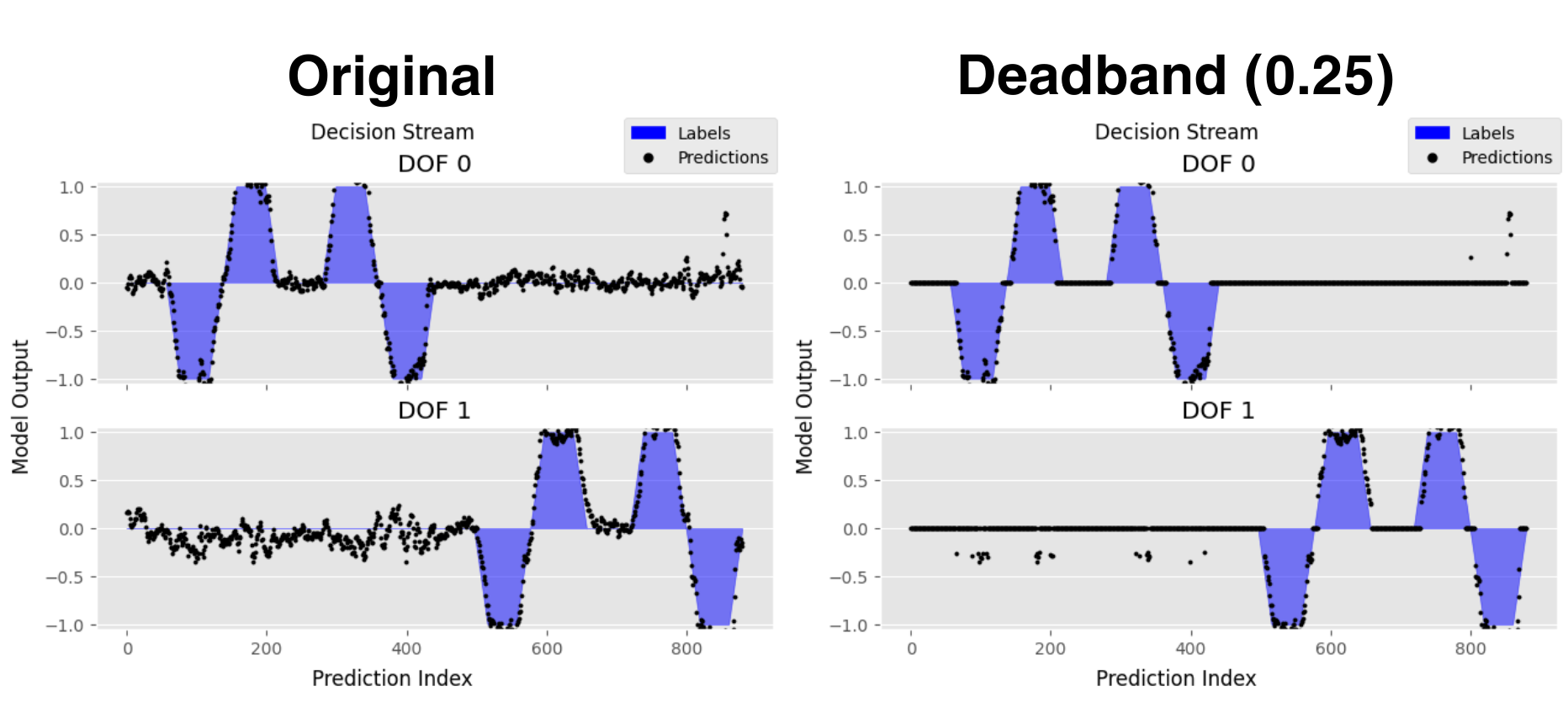

Modifies a regressor’s output based on whether the prediction’s magnitude is above a certain threshold. Any value whose magnitude is less than the defined threshold is output as 0. This post-processing technique is typically used to combat drift at lower amplitudes.

# Add deadband to regressor (i.e., values with magnitude < 0.25 will be output as 0)

regressor.add_deadband(0.25)
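The thresholding rule can be sketched directly on a small batch of predictions. The function name apply_deadband is hypothetical and plain lists are used for clarity; this is an illustration of the rule described above, not LibEMG's implementation.

```python
def apply_deadband(predictions, threshold):
    """Zero out every regressor output whose magnitude is below threshold."""
    return [
        [0.0 if abs(value) < threshold else value for value in dofs]
        for dofs in predictions
    ]


# One row per window, one column per degree of freedom (DOF).
raw_predictions = [
    [0.10, -0.80],
    [-0.20, 0.30],
    [0.60, 0.05],
]
filtered = apply_deadband(raw_predictions, threshold=0.25)
print(filtered)
```

Values at or above the threshold pass through unchanged, so the deadband only affects small outputs near rest.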

Figure 2 shows the decision stream of a regressor with no post-processing and deadband thresholding. In this visualization, the shaded blue regions are the ground truth and each black dot corresponds to a single prediction. Predictions for each degree of freedom (DOF) are plotted on separate subplots for visual clarity.

Figure 2: Decision Stream of Regressor with No Post-Processing and Deadband Thresholding. This can be created using the regressor's .visualize() method call.

References

[1] E. J. Scheme, B. S. Hudgins and K. B. Englehart, “Confidence-Based Rejection for Improved Pattern Recognition Myoelectric Control,” in IEEE Transactions on Biomedical Engineering, vol. 60, no. 6, pp. 1563-1570, June 2013, doi: 10.1109/TBME.2013.2238939.

[2] Scheme E, Englehart K. Training Strategies for Mitigating the Effect of Proportional Control on Classification in Pattern Recognition Based Myoelectric Control. J Prosthet Orthot. 2013 Apr 1;25(2):76-83. doi: 10.1097/JPO.0b013e318289950b. PMID: 23894224; PMCID: PMC3719876.

[3] Wahid MF, Tafreshi R, Langari R. A Multi-Window Majority Voting Strategy to Improve Hand Gesture Recognition Accuracies Using Electromyography Signal. IEEE Trans Neural Syst Rehabil Eng. 2020 Feb;28(2):427-436. doi: 10.1109/TNSRE.2019.2961706. Epub 2019 Dec 23. PMID: 31870989.

[4] E. Scheme, B. Lock, L. Hargrove, W. Hill, U. Kuruganti and K. Englehart, “Motion Normalized Proportional Control for Improved Pattern Recognition-Based Myoelectric Control,” in IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 22, no. 1, pp. 149-157, Jan. 2014, doi: 10.1109/TNSRE.2013.2247421.

[5] A. Ameri, E. N. Kamavuako, E. J. Scheme, K. B. Englehart, and P. A. Parker, “Support vector regression for improved real-time, simultaneous myoelectric control,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 22, no. 6, pp. 1198–1209, Nov. 2014, doi: 10.1109/TNSRE.2014.2323576.

[Sklearn] Fabian Pedregosa, Gaël Varoquaux, Alexandre Gramfort, Vincent Michel, Bertrand Thirion, Olivier Grisel, Mathieu Blondel, Peter Prettenhofer, Ron Weiss, Vincent Dubourg, Jake Vanderplas, Alexandre Passos, David Cournapeau, Matthieu Brucher, Matthieu Perrot, and Édouard Duchesnay. 2011. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 12 (2011), 2825–2830.